We’ve been rapidly iterating on and improving Cloud over the last several weeks, and with the pace of releases, it was time to start removing some of our recent temporary flags. I planned carefully: I created a spreadsheet so the team could verify which flags were ready for cleanup, tagged those flags in Cloud, and triple-checked that they were no longer present on our `main` branch. I still screwed it up.

So what happened?

- I opened a pull request that removed the tagged feature flags from the code.

- I triple-checked each flag individually before merging into `main` and deploying to staging.

- After manually verifying each flag was removed, I forgot to promote the code to production.

- I archived the feature flags.

- Flipper returned `false` for all `enabled?` checks of the missing flags.

So production was still checking that handful of recent feature flags, but every check returned `false` because the flags had been archived. One of those flags was `:docs`, so our documentation was temporarily offline. 🤦🏻‍♂️

Fortunately, it was a fairly small snafu. But the mistake reinforced something we’ve already been working on: Flipper Cloud needs guardrails. For example, and purely hypothetically, it shouldn’t be easy to delete a feature flag that production is still checking! (We’re working on multiple solutions for this; more on that later.)

I’ve been advocating for additional guardrails since I started working with Flipper, and those improvements are still being planned and developed.

You see, the rest of the team has been using feature flags for years, starting at GitHub and continuing through later projects that use Flipper Cloud, including Flipper Cloud itself. I, on the other hand, am blissfully inexperienced with feature flag nuances. While I’ve been deploying web applications for well over 20 years, I’m relatively new to formalized feature flags.

As I learn my way around, I make a point of speaking up whenever I see an opportunity to further reduce risk and make sure feature flags can be proactively cleaned up and safely removed. But despite an acute awareness of the potential pitfalls, and the extra caution I add while building experience developing, releasing, and cleaning up feature flags, there’s clearly room for additional guardrails, even for us.

Generally speaking, we have a pretty standard release process for a small team. We use pull requests, and usually nothing goes out without at least one other team member reviewing it. When pull requests are merged, they’re automatically released to staging, where we verify and test them manually before promoting the changes to production.

According to Heroku, I’ve deployed Flipper Cloud almost 150 times over the last several months. So the process isn’t new to me, but this was my first time cleaning up our temporary feature flags.

Early Improvements to Support Cleanup

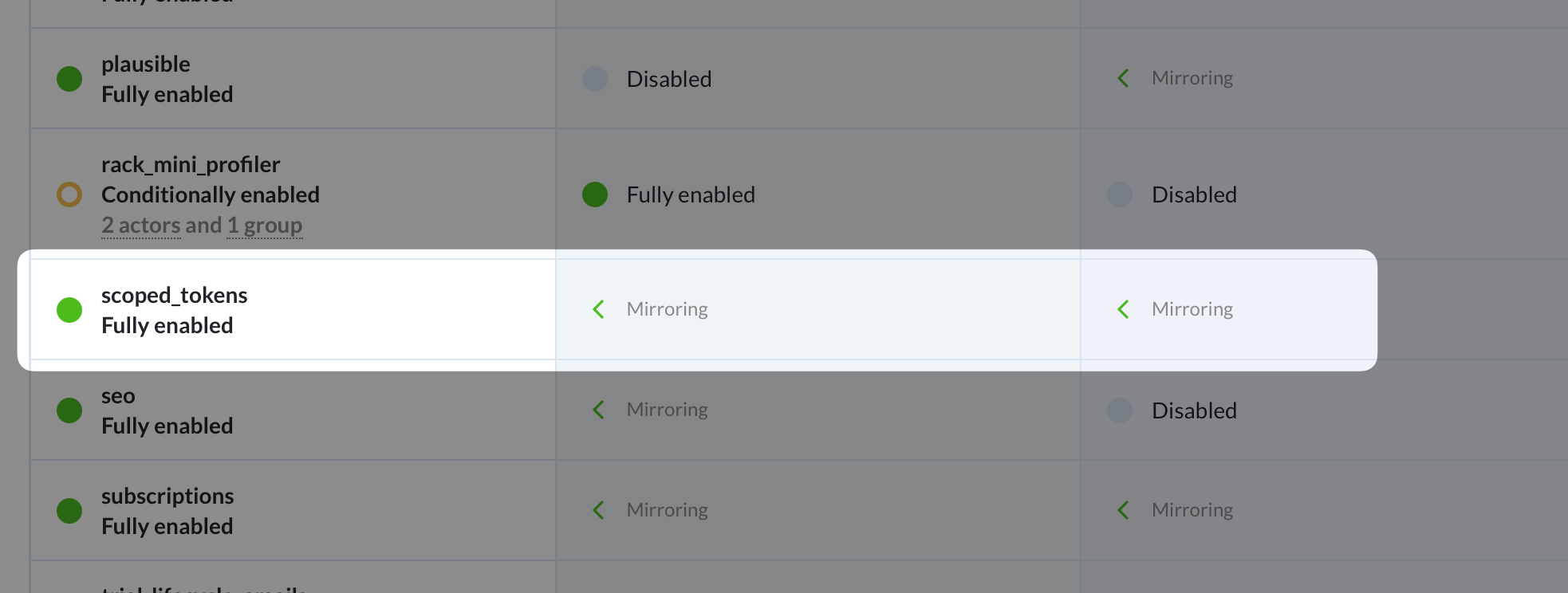

We’re not just aware of the value of additional guardrails; we’re actively working on them from several angles, alongside a handful of other features. Our recently released project overview was one of the first steps toward helping teams identify flags that are good candidates for cleanup.

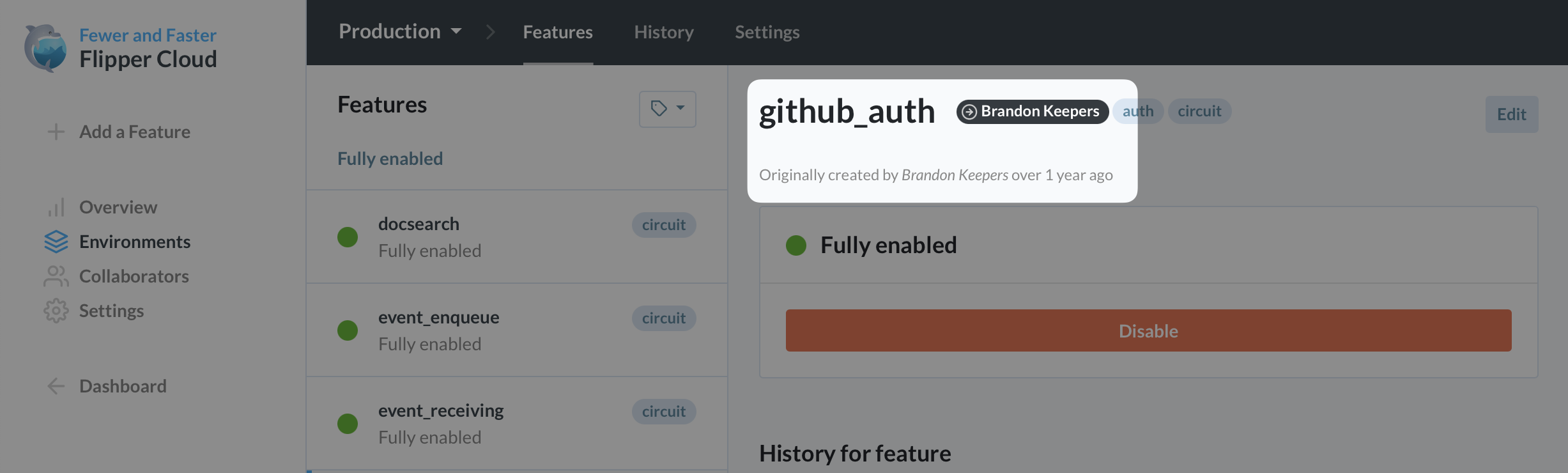

We also started surfacing each feature’s creator and added the ability to specify feature owners, so it’s easier for teams to keep track of the team member who knows the most about a flag’s current status. But this is only the beginning; we still need to layer on additional guidance and protections.

Further Feature Flag Safety Mechanisms

Internally, we’re really excited about recording client-side analytics so we can give more robust advice about which feature flags can, and should, be safely removed. Currently, Flipper Cloud is designed so that applications are fully insulated from any Flipper Cloud downtime. Because all flags are stored locally on your servers, checks are faster and don’t need a connection to Cloud.

That’s great for performance, but it also means we don’t receive any low-level data on individual feature flags. Instead, the local instances of Flipper pull down all flag updates as a single batch, either on a regular interval or when notified of changes via webhooks. So while we know when we’ve pushed a batch of updated flag data to your servers, we don’t know how often a specific flag was checked in a given environment, or when it was last checked, because those checks happen locally.
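To make that trade-off concrete, here is a minimal Python sketch of the local-evaluation model described above. It is not Flipper’s implementation (Flipper is a Ruby library), and `LocalFlagStore`, `fetch_all`, `maybe_poll`, and `on_webhook` are hypothetical names. The store replaces its whole snapshot in one batch, either on a polling interval or when a webhook arrives, and answers every check from memory. Nothing in `enabled` reports back to the remote side, which is why Cloud can’t tell how often a flag is checked, and a flag missing from the latest batch simply evaluates to `False`.

```python
import time
from typing import Callable, Dict


class LocalFlagStore:
    """Hypothetical in-memory flag store that syncs from a remote source.

    Checks read only from the local snapshot, so they never touch the network.
    """

    def __init__(self, fetch_all: Callable[[], Dict[str, bool]], interval: float = 10.0):
        self._fetch_all = fetch_all  # returns every flag's state in one batch
        self._interval = interval    # polling interval in seconds
        self._flags: Dict[str, bool] = {}
        self._last_sync = float("-inf")

    def sync(self) -> None:
        # Replace the whole snapshot; flags absent from the batch disappear locally.
        self._flags = dict(self._fetch_all())
        self._last_sync = time.monotonic()

    def maybe_poll(self) -> None:
        # Polling path: refresh only once the interval has elapsed.
        if time.monotonic() - self._last_sync >= self._interval:
            self.sync()

    def on_webhook(self) -> None:
        # Webhook path: the remote side reported a change, so refresh immediately.
        self.sync()

    def enabled(self, name: str) -> bool:
        # Purely local lookup. Nothing is reported back, and unknown flags are off.
        return self._flags.get(name, False)


remote = {"docs": True, "search": False}
store = LocalFlagStore(lambda: remote)
store.sync()
print(store.enabled("docs"))  # True

del remote["docs"]  # the flag is archived remotely
store.on_webhook()
print(store.enabled("docs"))  # False
```

The last few lines replay the `:docs` incident in simplified form: once the flag disappeared from the synced data, every local check fell back to `False`, and the remote side never learned that production was still asking.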

Once we wrap up analytics, Flipper will be able to record, roll up, and report more granular flag usage data back to Flipper Cloud. With that, we’ll be able to offer more helpful insight into when a given flag is stable enough to remove and, more importantly, when it is safe to remove. We could potentially even block a flag from being removed until it hasn’t been seen in production for a set amount of time.
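Here is one way the record, roll up, and report loop and the removal block could fit together. This is a hypothetical Python sketch, not Flipper Cloud’s design: `FlagUsageRecorder` counts checks in memory and drains them into a per-flag rollup, and `RemovalGuard` keeps the latest last-seen time per environment and refuses to archive a flag seen in production within a `quiet_period`. The clock is injectable so the example runs deterministically.

```python
import time
from collections import Counter
from typing import Callable, Dict, Tuple

Clock = Callable[[], float]


class FlagUsageRecorder:
    """Hypothetical client-side recorder: counts flag checks locally."""

    def __init__(self, clock: Clock = time.time):
        self._clock = clock
        self._counts: Counter = Counter()
        self._last_seen: Dict[str, float] = {}

    def record(self, name: str) -> None:
        # Called on every check; cheap and in-memory only.
        self._counts[name] += 1
        self._last_seen[name] = self._clock()

    def rollup(self) -> Dict[str, dict]:
        # Drain the counters into one summary per flag, ready to report in a batch.
        summary = {
            name: {"checks": count, "last_seen": self._last_seen[name]}
            for name, count in self._counts.items()
        }
        self._counts.clear()
        return summary


class RemovalGuard:
    """Hypothetical server-side guard: refuse to archive recently seen flags."""

    def __init__(self, quiet_period: float, clock: Clock = time.time):
        self._quiet_period = quiet_period  # seconds a flag must go unseen
        self._clock = clock
        self._last_seen: Dict[Tuple[str, str], float] = {}

    def ingest(self, environment: str, rollup: Dict[str, dict]) -> None:
        # Keep the most recent last-seen time per (environment, flag).
        for name, stats in rollup.items():
            key = (environment, name)
            previous = self._last_seen.get(key, float("-inf"))
            self._last_seen[key] = max(previous, stats["last_seen"])

    def can_archive(self, name: str, environment: str = "production") -> bool:
        last = self._last_seen.get((environment, name))
        if last is None:
            return True  # never reported in this environment (a simplification)
        return self._clock() - last >= self._quiet_period


now = [1_000.0]


def fake_clock() -> float:
    return now[0]


recorder = FlagUsageRecorder(fake_clock)
guard = RemovalGuard(quiet_period=7 * 24 * 3600, clock=fake_clock)

recorder.record("docs")
guard.ingest("production", recorder.rollup())
print(guard.can_archive("docs"))  # False: checked in production moments ago

now[0] += 8 * 24 * 3600
print(guard.can_archive("docs"))  # True: unseen for more than a week
```

Treating a never-reported flag as safe to archive is a simplification; a stricter guard might require usage data from production before allowing removal at all.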

We’re also exploring ways to let teams explicitly mark flags as deprecated or removable, along with linting that proactively warns or raises errors when there’s a problem. Combined with data about when we last saw a flag in a given environment, these will go a long way toward preventing the kind of mistake I made.
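As an illustration of the linting idea, the sketch below scans Ruby source for flag checks and reports any that reference flags marked deprecated (a warning) or removable (an error). The status names, the `SEVERITY` mapping, and the assumption that checks look like `Flipper.enabled?(:flag_name)` are all hypothetical; a real linter would need to handle other call shapes, such as flag names held in variables.

```python
import re
from dataclasses import dataclass
from typing import Dict, List

# Assumption: flags are checked with Ruby calls shaped like `enabled?(:flag_name ...)`.
FLAG_CHECK = re.compile(r"enabled\?\(\s*:(\w+)")

# Hypothetical statuses: "deprecated" warns, "removable" fails the lint.
SEVERITY = {"deprecated": "warning", "removable": "error"}


@dataclass
class Finding:
    severity: str
    flag: str
    line: int


def lint_source(source: str, statuses: Dict[str, str]) -> List[Finding]:
    """Report every check of a flag whose status maps to a severity."""
    findings = []
    for lineno, text in enumerate(source.splitlines(), start=1):
        for match in FLAG_CHECK.finditer(text):
            flag = match.group(1)
            # Flags with no recorded status are treated as active and ignored.
            severity = SEVERITY.get(statuses.get(flag, "active"))
            if severity:
                findings.append(Finding(severity, flag, lineno))
    return findings


code = """\
if Flipper.enabled?(:docs)
  render_docs
elsif Flipper.enabled?(:old_search, current_user)
"""
statuses = {"docs": "removable", "old_search": "deprecated"}
for finding in lint_source(code, statuses):
    print(f"{finding.severity}: :{finding.flag} still checked on line {finding.line}")
```

A lint like this only sees the code it scans. In my case, `main` was already clean and production was simply running an older build, which is why pairing it with last-seen data from production matters.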

There’s plenty more, but given customer requests and our own stumbles over these shortcomings, this felt like a good opportunity to share some hard-earned experience and some of the ways we’re working to make these kinds of mistakes harder for others to make.